The Arjuna Compiler

Latest revision as of 15:44, 15 April 2019

Overview

This page refers to the Arjuna line of compilers, which runs up to version 0.5 and is legacy with respect to the latest Oubliette 1.0 line.

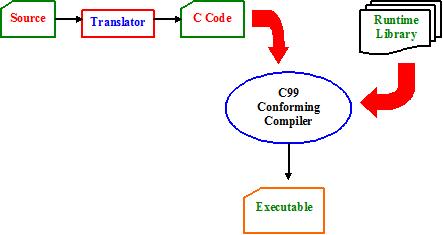

Although not essential to the programmer, it is quite useful to know the basics of how the implementation hierarchy works.

The core translator produces ANSI standard C99 code which uses the Message Passing Interface (version 2) for communication. The target machine therefore needs an implementation of MPI, such as OpenMPI, MPICH or a vendor specific MPI; any of these will work with the generated code. Our runtime library (known as LOGS) must also be linked in. The runtime library performs two roles. Firstly, it is architecture specific (versions exist for Linux, Windows etc.), as it contains any non-portable code which is needed and is also optimised for specific platforms. Secondly, it contains functions which are called often and which would otherwise increase the size of the generated C code.

The resulting executable can be thought of as any normal executable, and can be run in a number of ways. Firstly, for simplicity, the user can execute it by double clicking it, and the program will automatically spawn the number of processes required. Secondly, the executable can be run via the MPI daemon, and may be started via a process file or a queue submission program. Note that, as long as your MPI implementation supports multi-core machines (and the majority of them do), the code can be executed properly on a multi-core machine, often with the processes wrapping around the cores (for instance, 2 processes on 2 cores is 1 process on each, 6 processes on 2 cores is 3 processes on each, and so on).
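The wrapping of processes around cores can be illustrated with a small sketch. It assumes a simple round-robin policy (process rank modulo the number of cores); the actual placement is decided by the MPI implementation and the operating system, so this is only a model of the arithmetic in the example above. The function names are hypothetical and are not part of Mesham or MPI.

```python
from collections import Counter


def wrap_processes(num_processes, num_cores):
    """Map each process rank to a core using round-robin wrapping.

    Assumption: rank r lands on core r % num_cores. Real MPI launchers
    may place processes differently.
    """
    if num_cores <= 0:
        raise ValueError("num_cores must be positive")
    return {rank: rank % num_cores for rank in range(num_processes)}


def processes_per_core(num_processes, num_cores):
    """Return a list whose entry i is the number of processes on core i."""
    counts = Counter(wrap_processes(num_processes, num_cores).values())
    return [counts[core] for core in range(num_cores)]


if __name__ == "__main__":
    print(processes_per_core(2, 2))  # 2 processes on 2 cores
    print(processes_per_core(6, 2))  # 6 processes on 2 cores
```

Under this assumed policy, an uneven split such as 3 processes on 2 cores places the extra process on the first core.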

Translation In More Detail

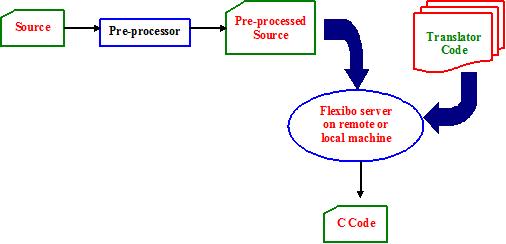

The translator itself is split into a number of different phases. Firstly, your Mesham code goes through a preprocessor, written in Java, which does a number of jobs, such as adding scoping information. When this is complete, the code is sent to the translation server. Because of the design of FlexibO, the language we wrote the translator in, the actual translation is performed by a server listening over TCP/IP. This server can be on the local machine or a remote one, depending on the setup of your network. Once translation has completed, the generated C code is sent back to the client via TCP/IP and from there can be compiled. The most important benefit of this approach is flexibility: most commonly we use Mesham via the command line, but a web based interface also exists, allowing code to be written without the programmer installing any software on their machine.
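The client and server round trip described above can be sketched as follows. This is not the actual Arjuna wire protocol, which is not documented on this page. The sketch assumes the simplest possible scheme: the client sends the preprocessed source, closes its sending side to mark the end, and reads back the generated C code. The `fake_translate` function is a hypothetical stand-in for the FlexibO translator, and all other names are likewise illustrative.

```python
import socket
import threading


def fake_translate(source):
    """Hypothetical stand-in for the translator: returns a trivial C program."""
    return ("/* generated from %d characters of source */\n"
            "int main(void) { return 0; }\n" % len(source))


def recv_all(conn):
    """Read from the socket until the peer closes its sending side."""
    chunks = []
    while True:
        data = conn.recv(4096)
        if not data:
            break
        chunks.append(data)
    return b"".join(chunks)


def start_server(host="127.0.0.1"):
    """Start a translation server that handles one request, then stops."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind((host, 0))  # port 0: let the OS choose a free port
    listener.listen(1)
    port = listener.getsockname()[1]

    def serve_once():
        conn, _ = listener.accept()
        with conn:
            source = recv_all(conn).decode("utf-8")
            conn.sendall(fake_translate(source).encode("utf-8"))
        listener.close()

    thread = threading.Thread(target=serve_once, daemon=True)
    thread.start()
    return port, thread


def translate_remotely(source, host, port):
    """Client side: send the source, then receive the generated C code."""
    with socket.create_connection((host, port)) as conn:
        conn.sendall(source.encode("utf-8"))
        conn.shutdown(socket.SHUT_WR)  # tell the server the source is complete
        return recv_all(conn).decode("utf-8")


if __name__ == "__main__":
    port, thread = start_server()
    print(translate_remotely("example source text", "127.0.0.1", port))
    thread.join()
```

Because the client only needs a host and a port, the same code works whether the server runs on the local machine or a remote one, which is the flexibility the paragraph above refers to.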

Command Line Options

- -o [name] Select output filename

- -I[dir] Look in the directory (as well as the current one) for preprocessor files

- -c Output C code only

- -t Just link and output C code

- -e Display C compiler errors and warnings also

- -s Silent operation (no warnings)

- -f [args] Forward arguments to the C compiler

- -pp Just preprocess the Mesham source and output results

- -static Statically link against the runtime library

- -shared Dynamically link against the runtime library (default)

- -debug Display compiler structural warnings before rerunning

Static and Dynamic Linking Against the RTL

You can link against the runtime library (RTL) either statically or dynamically. Linking statically places a copy of the RTL within your executable; the advantage is that the RTL need not be installed on the target machine, as the executable is completely self contained. Linking dynamically means that the RTL must be present on the target machine, where it is linked in at runtime; the advantage is that the executable is considerably smaller and a change to the RTL need not require all your code to be recompiled.